Coordinated Response

Services and tools for incident response management

Highlights

What Does NIST Say About Incident Response?

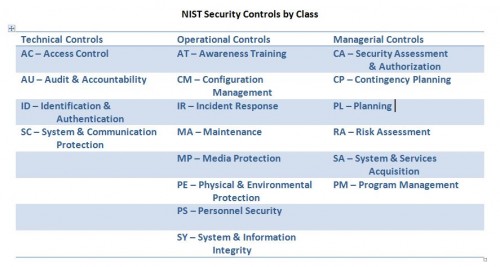

The National Institute of Standards and Technology (NIST) produced a catalog of Security Controls for Federal Information Systems. Incident Response is one of the control families.

In Special Publication (SP) 800-53, Revision 3, NIST includes Incident Response as one of 18 families of security controls. For the complete list, see the end of this item. Much of the material is useful beyond the federal government.

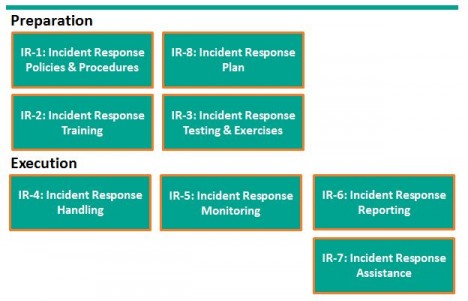

First, NIST provides a useful framework for thinking about Incident Response (IR). Please don’t let the control numbers confuse you: I start with preparation, but NIST numbers the Incident Response Plan control IR-8.

Incident Response preparation starts with policies and procedures and the preparation of an incident response plan. With the plan in hand, train users to recognize and report incidents, and train the response team to organize and react. For some types of incidents, the plan may also need to be tested with field exercises.

Incident response execution includes handling, monitoring, reporting, and possibly additional assistance from specialists. NIST suggests each organization consider these controls and determine when or whether they apply.
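The grouping above can be captured as a small lookup table, which makes it easy to track which controls an organization has addressed or ruled out. This is a minimal Python sketch: the control titles follow the Incident Response family in SP 800-53 Revision 3, while the preparation/execution split is this article’s grouping rather than a NIST category, and the names `Phase`, `controls_for`, and `gaps` are hypothetical.

```python
from __future__ import annotations

from enum import Enum


class Phase(Enum):
    """This article's two groupings; not an official NIST category."""
    PREPARATION = "preparation"
    EXECUTION = "execution"


# Incident Response (IR) family controls as titled in SP 800-53 Rev. 3,
# each tagged with the phase this article assigns it to.
IR_CONTROLS: dict[str, tuple[str, Phase]] = {
    "IR-1": ("Incident Response Policy and Procedures", Phase.PREPARATION),
    "IR-2": ("Incident Response Training", Phase.PREPARATION),
    "IR-3": ("Incident Response Testing and Exercises", Phase.PREPARATION),
    "IR-4": ("Incident Handling", Phase.EXECUTION),
    "IR-5": ("Incident Monitoring", Phase.EXECUTION),
    "IR-6": ("Incident Reporting", Phase.EXECUTION),
    "IR-7": ("Incident Response Assistance", Phase.EXECUTION),
    "IR-8": ("Incident Response Plan", Phase.PREPARATION),
}


def _number(control_id: str) -> int:
    """Numeric part of an ID such as 'IR-8', used for sorting."""
    return int(control_id.split("-")[1])


def controls_for(phase: Phase) -> list[str]:
    """Control IDs in the given phase, in NIST numeric order."""
    ids = [cid for cid, (_, p) in IR_CONTROLS.items() if p is phase]
    return sorted(ids, key=_number)


def gaps(implemented: set[str], not_applicable: set[str] | None = None) -> list[str]:
    """Controls that are neither implemented nor judged not applicable."""
    settled = set(implemented) | set(not_applicable or ())
    return sorted((cid for cid in IR_CONTROLS if cid not in settled), key=_number)


if __name__ == "__main__":
    for phase in Phase:
        print(phase.value, controls_for(phase))
    # A hypothetical organization with a policy and a plan that has ruled out IR-7:
    print(gaps({"IR-1", "IR-8"}, not_applicable={"IR-7"}))
```

Run as a script, it lists the four preparation controls (IR-1, IR-2, IR-3, IR-8), the four execution controls (IR-4 through IR-7), and the remaining gaps `['IR-2', 'IR-3', 'IR-4', 'IR-5', 'IR-6']`. Recording “not applicable” explicitly reflects NIST’s advice that each organization decide whether and when a control applies.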

In the SP 800-53 Revision 4 draft, two additional controls were identified: IR-9 Information Spillage Response and IR-10 Integrated Information Security Cell. The first addresses the response when sensitive information is leaked onto systems not authorized to hold it. The second describes the organization of a specialized element of the Incident Response Team.

While the Incident Response Plan is just one of the eight IR controls, it touches all the others. According to NIST, a good Incident Response Plan:

- Provides a roadmap for implementing the incident response capability.

- Describes the structure and organization of the incident response capability.

- Provides a high-level approach for how the incident response capability fits into the overall organization.

- Meets the unique requirements of the organization, which relate to mission, size, structure, and functions.

- Identifies reportable incidents.

- Provides metrics for measuring the incident response capability within the organization.

- Defines the resources and management support needed to effectively maintain and mature an incident response capability.

- Is reviewed and approved at an appropriate level.

I heartily agree with NIST’s declaration.

NIST Security Controls

Here is the complete list of security control families identified in SP 800-53 and organized by the three control classes: Technical, Operational, and Managerial.
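The 18 families and their classes, as listed in the family table of SP 800-53 Revision 3 (where NIST calls the third class “Management”), can be kept as a simple lookup table. This minimal Python sketch groups them by class; verify the transcription against the publication before relying on it, and note that the helper `by_class` is hypothetical.

```python
from collections import defaultdict

# Security control families in SP 800-53 Rev. 3: identifier -> (name, class)
FAMILIES = {
    "AC": ("Access Control", "Technical"),
    "AT": ("Awareness and Training", "Operational"),
    "AU": ("Audit and Accountability", "Technical"),
    "CA": ("Security Assessment and Authorization", "Management"),
    "CM": ("Configuration Management", "Operational"),
    "CP": ("Contingency Planning", "Operational"),
    "IA": ("Identification and Authentication", "Technical"),
    "IR": ("Incident Response", "Operational"),
    "MA": ("Maintenance", "Operational"),
    "MP": ("Media Protection", "Operational"),
    "PE": ("Physical and Environmental Protection", "Operational"),
    "PL": ("Planning", "Management"),
    "PS": ("Personnel Security", "Operational"),
    "RA": ("Risk Assessment", "Management"),
    "SA": ("System and Services Acquisition", "Management"),
    "SC": ("System and Communications Protection", "Technical"),
    "SI": ("System and Information Integrity", "Operational"),
    "PM": ("Program Management", "Management"),
}


def by_class(families=FAMILIES):
    """Group 'ID Name' strings under their control class, preserving table order."""
    grouped = defaultdict(list)
    for ident, (name, cls) in families.items():
        grouped[cls].append(f"{ident} {name}")
    return dict(grouped)


if __name__ == "__main__":
    for cls, names in by_class().items():
        print(f"{cls} ({len(names)}):")
        for name in names:
            print("  " + name)
```

Grouped this way, the table has 4 Technical, 9 Operational, and 5 Management families, with Incident Response among the Operational ones.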

Raising the Bar on Cybersecurity

Last week President Obama issued an Executive Order on Improving Critical Infrastructure Cybersecurity and Presidential Policy Directive 21 (PPD-21), Critical Infrastructure Security and Resilience. Unfortunately, these are a watered-down rehash of the previous administrations’ proposals. Without legislation, they are directives to the executive branch and mere suggestions to industry.

What I found interesting was the report Raising the Bar on Cybersecurity, released by the Center for Strategic and International Studies (CSIS). The report identified measures that reduced the risk from attacks by 85%:

- Whitelisting – only allowing authorized software to run on a computer.

- Very rapid patching for operating systems and programs.

- Limiting administrative privileges to a minimal number of users.

I agree. These measures are effective. Had these controls been in place at the Department of Energy, they might have prevented the intrusion and subsequent loss of data. However, I would add two controls to this list:

- Eliminate outdated hardware and software.

- Perform annual attack & penetration (A&P) testing.

But there are challenges.

Whitelisting is time-consuming and can be difficult to implement. Locked-down desktops, standards for server builds, and network configuration profiles must already be in place. Otherwise, whitelisting may be circumvented, especially by determined outside attackers and disgruntled internal users.
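At its core, a whitelist is an allow/deny decision made before a program runs. The minimal Python sketch below shows one common way to make that decision, comparing a file’s SHA-256 digest with a set of approved digests. The function names are hypothetical, and real enforcement is done by the operating system or an endpoint agent at execution time, not by a script like this.

```python
from __future__ import annotations

import hashlib
import tempfile
from pathlib import Path


def digest(data: bytes) -> str:
    """SHA-256 hex digest of an in-memory byte string."""
    return hashlib.sha256(data).hexdigest()


def file_digest(path: Path, chunk_size: int = 65536) -> str:
    """SHA-256 hex digest of a file, read in chunks so large binaries need little memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def is_allowed(path: Path, allowlist: set[str]) -> bool:
    """Allow execution only if the file's digest is on the approved list."""
    return file_digest(path) in allowlist


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        tool = Path(workdir) / "approved_tool.bin"  # hypothetical program
        tool.write_bytes(b"approved build")
        allowlist = {file_digest(tool)}             # recorded when the build was approved
        print(is_allowed(tool, allowlist))          # True
        tool.write_bytes(b"tampered build")         # any change alters the digest
        print(is_allowed(tool, allowlist))          # False
```

Because any change to a file changes its digest, a hash-based list must also be updated after every approved patch, which is part of why whitelisting takes so much effort to maintain.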

Patching is always a good thing, but every patch should be tested before it is applied. This is particularly challenging for industrial control systems associated with critical infrastructure.

Reducing administrative privileges is a good idea that appears in most InfoSec improvement programs, and it is one of the first things a security auditor reviews. A tool like Unix’s sudo is a good way to assign specific privileges to specific users. Versions of sudo, or equivalents to it, are available for other operating systems.
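A first step toward limiting administrative privileges is simply counting who has them. The Python sketch below is a minimal audit, assuming that administrators on a Unix host are the members of groups named `sudo`, `wheel`, or `admin` (a common convention, but check your own sudoers policy). The helper names and the limit are hypothetical.

```python
from __future__ import annotations

# Assumption: group names that commonly confer sudo rights; adjust to your sudoers policy.
PRIVILEGED_GROUPS = ("sudo", "wheel", "admin")


def privileged_users(group_members: dict[str, list[str]],
                     privileged: tuple[str, ...] = PRIVILEGED_GROUPS) -> set[str]:
    """Union of the members of every privileged group."""
    users: set[str] = set()
    for group in privileged:
        users.update(group_members.get(group, []))
    return users


def exceeds_limit(users: set[str], limit: int) -> bool:
    """True when more accounts hold admin rights than policy allows."""
    return len(users) > limit


def load_local_groups(names: tuple[str, ...] = PRIVILEGED_GROUPS) -> dict[str, list[str]]:
    """Read group members from the local Unix group database.

    gr_mem lists supplementary members only; users whose primary group is
    the privileged group, and rights granted directly in sudoers, are not seen.
    """
    import grp  # Unix-only standard library module

    members: dict[str, list[str]] = {}
    for name in names:
        try:
            members[name] = list(grp.getgrnam(name).gr_mem)
        except KeyError:  # group does not exist on this host
            members[name] = []
    return members


if __name__ == "__main__":
    admins = privileged_users(load_local_groups())
    # limit=2 is a hypothetical policy value
    print(sorted(admins), "over limit:", exceeds_limit(admins, limit=2))
```

Keeping the counting logic separate from the operating-system lookup makes it easy to feed in membership data exported from other systems, such as a directory service.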

Outdated software or hardware is often the source of vulnerabilities because patches and updates are no longer provided. Unfortunately, some entities lack the resources to eliminate old software and hardware, so they must identify alternative means of providing adequate security.
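Finding outdated components starts with an inventory that records each item’s vendor end-of-support date. This minimal Python sketch flags items past that date and separates out those already covered by compensating controls. The asset names and dates in the example are hypothetical, not real vendor schedules.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Asset:
    name: str
    end_of_support: date | None  # None: the vendor has not announced an end date


def unsupported(assets: list[Asset], today: date) -> list[str]:
    """Assets whose vendor support ended on or before `today`."""
    return [a.name for a in assets
            if a.end_of_support is not None and a.end_of_support <= today]


def needs_action(assets: list[Asset], today: date, compensated: set[str]) -> list[str]:
    """Unsupported assets that have no documented compensating controls yet."""
    return [name for name in unsupported(assets, today) if name not in compensated]


if __name__ == "__main__":
    # Hypothetical inventory; replace with your own asset records.
    inventory = [
        Asset("legacy-hmi-workstation", date(2011, 1, 1)),
        Asset("file-server-os", date(2020, 1, 1)),
        Asset("in-house-app", None),
    ]
    today = date(2013, 2, 15)
    print(unsupported(inventory, today))  # ['legacy-hmi-workstation']
    print(needs_action(inventory, today, compensated={"legacy-hmi-workstation"}))  # []
```

The second list is the one to act on: anything unsupported that has neither been retired nor given alternative protection.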

Finally, annual A&P testing may be expensive, but for organizations at risk, especially of losing intellectual property, exposing personally identifiable information (PII), or taking a hit to their corporate reputation, the expense is well justified. A&P testing performed by qualified third parties is a “no-harm, no-foul” way to ensure that networks and systems are properly protected. It also shows that a modicum of due diligence has been performed, which may help forestall a lawsuit following a breach or lessen its impact.

So why doesn’t every organization jump on the A&P testing bandwagon? In addition to the expense, some IT directors are reluctant to employ A&P testing because it risks exposing poor network design or configuration errors to executives or the board of directors. These folks, however, should be thinking about how to protect their shareholders’ interests, not their careers.

Implementing these five measures will not only raise the bar for protecting organizational assets but also reduce the effort required to maintain an efficient and effective IT environment.

Department of Energy Hack Raises Issues

The Washington Free Beacon reported on February 4, 2013: “Computer networks at the Energy Department were attacked by sophisticated hackers in a major cyber incident two weeks ago and personal information on several hundred employees was compromised by the intruders.” A total of 14 servers and 20 workstations at the headquarters were penetrated during the attack.

This article, like others in the recent past, raises the same issue: inadequate security measures stemming from improperly trained administrators, inexperienced security staff, budgetary constraints, “institutional hubris,” or some combination of these. Government has a responsibility to protect the information its citizens entrust to it. However, the government (all branches) has failed in this endeavor and will likely continue to fail until it wakes up to reality and gets smarter than those attempting to compromise its systems.

Mandatory security testing and training must be implemented at all levels of IT and operations throughout the government. Wherever sensitive information is involved, training must be provided. I am not talking about awareness training; I am talking about training administrators, IT managers, and security staff on what to look for, how to program and configure systems properly, and, most importantly, how to test systems and properly conduct attack and penetration tests.

Do not rely on hiring people with long strings of certifications after their names. In many cases, they are merely cert collectors who have no clue what the certs really mean, other than that more certs mean better chances of getting a job. Establish real training programs. Work with groups such as GIAC (Global Information Assurance Certification), which has programs that REQUIRE a practical exercise before a cert can be awarded. NSA relies on GIAC-certified individuals; why shouldn’t the rest of government?

Finally, forget sending trained staff away to conferences. Not only will the conferences be a waste of time (it seems only controversial, contrarian views are wanted as talk topics these days), but you will also leave your networks and systems in the hands of less qualified staff should the inevitable crisis happen. Everyone likes to go to conferences, if for no other reason than to collect suitcases full of vendor-supplied swag, but the best use of training money is real training, such as that offered by organizations like the SANS Institute.

CSO magazine, analyzing this story, cited a number of sources that support the same conclusion.

New York Times Hacked Again


According to the New York Times, “Hackers in China Attacked the Times for Four Months.” The Times’s incident response provides examples of best practices as well as lessons learned.


The most important best practice is to make security improvements based on the postmortem and lessons learned.

In the case of the New York Times, the hackers were able to crack the password of every Times employee and then used those passwords to gain access to the personal computers of 53 employees. The attack was discovered on October 25. The investigation placed the initial compromise on or around September 13, six weeks earlier.
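The “six weeks” of undetected access (often called dwell time) is easy to check with date arithmetic. This short Python sketch assumes the year was 2012, inferred from the later mention of election night on November 6. The year does not change the result because the span does not cross February. The function name is hypothetical.

```python
from datetime import date


def dwell_days(compromised: date, discovered: date) -> int:
    """Days between initial compromise and discovery."""
    return (discovered - compromised).days


if __name__ == "__main__":
    days = dwell_days(date(2012, 9, 13), date(2012, 10, 25))
    print(days, "days =", days / 7, "weeks")  # 42 days = 6.0 weeks
```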

First, the Times engaged its Internet service provider (ISP), AT&T, to watch for unusual activity as part of AT&T’s intrusion detection protocol. The Times received an alert from AT&T that coincided with the publication of a story on the Chinese Prime Minister’s family wealth.

The New York Times briefed the Federal Bureau of Investigation (FBI). The FBI has jurisdiction over cybercrimes in the United States.

At the start of week 9, when the response team, even with AT&T assistance, was unable to eliminate the malicious code, the Times engaged Mandiant, a firm specializing in cybersecurity breaches. This is both a lesson learned and a best practice. Expect to need additional help when a significant incident occurs, but it’s best to engage the specialists when the response plan is first developed.

The response team identified 45 pieces of malware that gave the intruders an extensive tool set for extracting information. Antivirus software identified only one of them. This is an important lesson: advanced or enhanced malware may be difficult to detect, so defense in depth requires additional security controls. In this case, monitoring provided the alert.
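Expressed as rates using the counts above, the antivirus software caught roughly one tool in every fifty:

```latex
\text{detected} = \frac{1}{45} \approx 2.2\%, \qquad
\text{missed} = \frac{44}{45} \approx 97.8\%
```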

At this point, the response team “allowed hackers to spin a digital web for four months to identify every digital back door the hackers used.” This let the Times (1) fully eradicate the intruders, (2) determine the extent of the intrusion and data extraction, and (3) build improved defenses for the future. Again, this is a best practice: investigation precedes eradication.

I found the hackers’ work habits particularly interesting. They worked a standard day starting at 8 A.M. Beijing time. Occasionally they worked as late as midnight, including on November 6, election night.
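Converting those hours to New York time puts the start of the attackers’ workday in the previous evening there. This Python sketch uses fixed UTC offsets (Beijing UTC+8; New York EDT, UTC-4, until daylight saving time ended on November 4, 2012, then EST, UTC-5) rather than a time-zone database, so it is only valid for the fall of 2012. The function name and sample dates are hypothetical; the article gives no specific timestamps.

```python
from datetime import datetime, timedelta, timezone

BEIJING = timezone(timedelta(hours=8), "CST (Beijing)")
NEW_YORK_EDT = timezone(timedelta(hours=-4), "EDT")
NEW_YORK_EST = timezone(timedelta(hours=-5), "EST")
# US daylight saving time ended at 2:00 A.M. EDT on November 4, 2012 (06:00 UTC).
DST_END_2012 = datetime(2012, 11, 4, 6, 0, tzinfo=timezone.utc)


def beijing_to_new_york(local: datetime) -> datetime:
    """Convert an aware Beijing timestamp to New York time (fall 2012 only)."""
    utc = local.astimezone(timezone.utc)
    return utc.astimezone(NEW_YORK_EDT if utc < DST_END_2012 else NEW_YORK_EST)


if __name__ == "__main__":
    for start in (datetime(2012, 10, 15, 8, 0, tzinfo=BEIJING),
                  datetime(2012, 11, 6, 8, 0, tzinfo=BEIJING)):
        print(start.isoformat(), "->", beijing_to_new_york(start).isoformat())
```

An 8 A.M. start in Beijing maps to 8 P.M. the previous evening in New York in October, and to 7 P.M. after the switch to standard time. For other years, a time-zone database (Python’s `zoneinfo`) is the safer choice than hard-coded offsets.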

Five Failures

I recently read an article on Computerworld.com titled Unseen Cyber War that, in my mind, was more about spreading fear, uncertainty, and doubt (FUD) than about objective reporting. The focus should have been on solving problems rather than hand-wringing, teeth-gnashing, and creating unreasonable angst about vulnerabilities that should have been fixed or, at a minimum, mitigated.

While the threats are real, this type of FUD does not help. The real problem is fivefold:

1. Failure by hardware and software vendors to deliver secure products. Software riddled with bugs and poor programming techniques, and hardware configured in ‘open’ modes, are recipes for disaster.

2. Failure by IT departments to enforce security standards on Internet-facing devices and to securely configure routers, servers, and other networking and computing devices. Putting devices in service and connecting them to the Internet without changing default settings is insane.

3. Failure by executive management in government and private industry to allocate the resources needed to train users in secure computing practices. CISOs are ready to train users, but until CIOs and CEOs understand the full ramifications of an ignorant user base, problems such as phishing and spear phishing, drive-by malware, and similar attacks will continue.

4. Failure of our education system to teach proper security techniques to students at every level. Many children today are familiar with computers and tablets by the time they enter first grade. Teachers should prepare syllabi aimed at appropriate grade levels that teach both security and ethics. Until this training is institutionalized throughout the education life cycle (primary, secondary, and university levels), there will be problems.

5. Failure by parents to understand what their children are doing on the Internet. Being open and honest with children is the best way to make sure children and young adults listen to you. Let them know that accessing the Internet from any device, mobile or otherwise, is a privilege, not a right. Parents should monitor use and let their kids know they are doing it. Don’t be sneaky about it: installing silent “net-nanny” software without telling your children it’s there will only raise trust issues, and the kids will simply find a way around it.
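Failure 2 is also the easiest to check for mechanically. The Python sketch below compares a device’s settings with a minimal hardening baseline before the device is connected. The setting names and the `violations` helper are hypothetical; only the SNMP community strings “public” and “private” are real, widely known factory defaults.

```python
from __future__ import annotations

from typing import Any, Callable

# Hypothetical baseline: setting name -> test the configured value must pass.
BASELINE: dict[str, Callable[[Any], bool]] = {
    "admin_password_changed": lambda v: v is True,
    "telnet_enabled": lambda v: v is False,
    "snmp_community": lambda v: v not in {"public", "private"},
}


def violations(config: dict[str, Any]) -> list[str]:
    """Settings that are missing or still fail the baseline, in baseline order."""
    return [name for name, passes in BASELINE.items()
            if name not in config or not passes(config[name])]


if __name__ == "__main__":
    router = {"admin_password_changed": False, "telnet_enabled": True,
              "snmp_community": "public"}  # straight out of the box
    print(violations(router))  # all three settings fail
```

Treating a missing setting as a violation is deliberate: a device whose configuration was never reviewed should not pass by default.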

Fix these five elements and you will have fewer problems with hackers, crackers, and other ne’er-do-wells.

A Focus on Incident Response

Coordinated Response is an incident response planning and consulting firm. We help you create an incident response plan, improve upon it, automate it, and manage it.

My focus, my interest, my expertise are in the area of incident response planning and management. Seasoned in business process management & automation, I have helped numerous organizations build business response management solutions. Working with my key advisers – Jim Bothe and John Walsh (see About Us – Leadership) – I began focusing on cybersecurity incident response management.

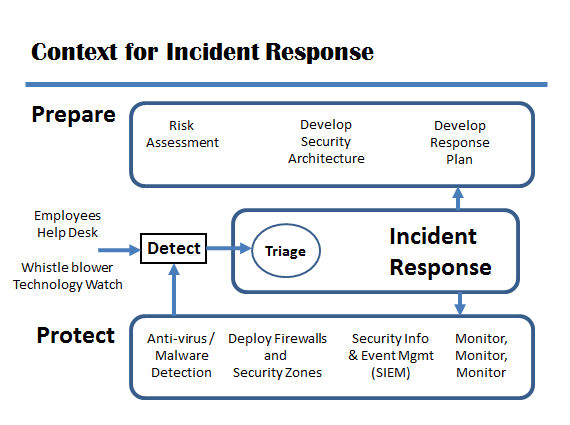

Where does response management fall in the realm of cybersecurity?

Most people, when asked about cybersecurity tools, mention antivirus software, firewalls, or intrusion detection systems. These important tools help protect systems and information. But if these tools fail and an incident occurs, an organized, coordinated response to the incident is needed.

Other people think about the effort to prepare the security plan and architecture. Preparation starts with a risk assessment and includes the security architecture, but it should also include an incident response plan. Responding to an incident without a well-thought-out, well-tested, well-practiced plan increases the risk, the cost, and the overall impact of the incident.

Some organizations might consider any activity performed by the Computer Security Incident Response Team (CSIRT) to be incident response, but many CSIRT activities are proactive. The CSIRT may participate in preparation and protection as part of incident management, not just the narrower incident response. In addition, legal, human resources, and security staff may participate as extended members of the CSIRT.

The diagram above is based on one in the publication Defining Incident Management Processes for CSIRTs: A Work in Progress (Carnegie Mellon University / Software Engineering Institute, May 2004). The publication, Technical Report CMU/SEI-2004-TR-015, is available at http://www.sei.cmu.edu/library/abstracts/reports/04tr015.cfm. The authors provide both insight and useful guidance. Don’t be fooled by its publication date or the tag line “a work in progress.”